Anthropic, the AI startup backed by hundreds of millions in venture capital (and perhaps soon hundreds of millions more), today unveiled the latest version of its GenAI tech, Claude. The company claims that the new model rivals OpenAI’s GPT-4 in performance.

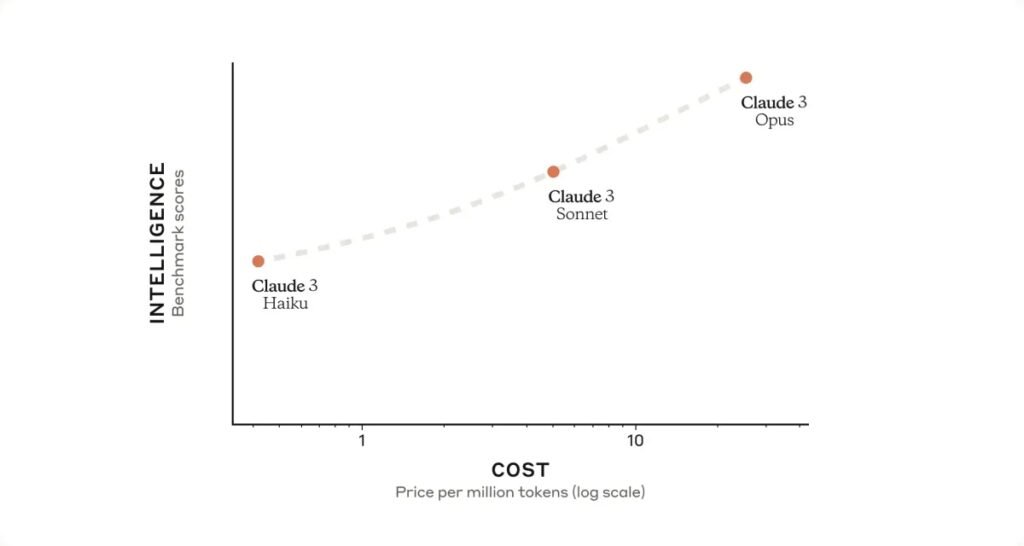

Claude 3, as Anthropic’s new GenAI is called, is a family of three models: Claude 3 Haiku, Claude 3 Sonnet, and Claude 3 Opus, with Opus the most powerful. Anthropic says that all three show “increased capabilities” in analysis and forecasting, along with better performance on specific benchmarks than models such as GPT-4 (but not GPT-4 Turbo) and Google’s Gemini 1.0 Ultra (but not Gemini 1.5 Pro).

Notably, Claude 3 is Anthropic’s first multimodal GenAI, which means that, like some variants of GPT-4 and Gemini, it can analyze images as well as text. Claude 3 can process photos, charts, graphs, and technical diagrams, drawing on PDFs, slideshows, and other document types.

Going a step beyond some of its GenAI competitors, Claude 3 can analyze up to 20 images in a single request. According to Anthropic, this lets it compare and contrast images.
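For readers working with larger image sets, the 20-image ceiling implies splitting the work across several requests. The snippet below is a minimal sketch of that batching step only; the function name `batch_images` and the file names are hypothetical, and no actual API call is made.

```python
from typing import List, Sequence

MAX_IMAGES_PER_REQUEST = 20  # per-request limit reported in the article


def batch_images(paths: Sequence[str], limit: int = MAX_IMAGES_PER_REQUEST) -> List[List[str]]:
    """Split image paths into consecutive groups of at most `limit` items."""
    if limit < 1:
        raise ValueError("limit must be at least 1")
    return [list(paths[i:i + limit]) for i in range(0, len(paths), limit)]


# Hypothetical file names, for illustration only.
photos = [f"photo_{n:02d}.png" for n in range(45)]
batches = batch_images(photos)
print([len(batch) for batch in batches])  # [20, 20, 5]
```

Each inner list could then be sent as one request, though comparisons across batches would need to be stitched together separately.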

However, Claude 3’s image processing has certain limitations.

Anthropic has disabled the models’ ability to identify people, probably out of concern for the ethical and legal ramifications. The company also acknowledges that Claude 3 is prone to mistakes with “low-quality” images (under 200 pixels) and struggles with tasks involving spatial reasoning (for example, reading an analog clock face) and object counting (it cannot give an exact count of the objects in an image).

Additionally, Claude 3 won’t produce any art. For the time being at least, the models are limited to picture analysis.

Whether handling text or images, Claude 3 is generally better than its predecessors at following multi-step instructions, producing structured output in formats like JSON, and conversing in languages other than English, Anthropic claims. Thanks to a “more nuanced understanding of requests,” Claude 3 should also refuse to answer questions less often, the company says. Soon, the models will cite the sources of their answers so that users can verify them.

According to Anthropic’s support article, Claude 3 tends to generate more expressive and engaging responses. Compared with the company’s older models, it is easier to prompt and steer, and users should be able to get the results they want with shorter, more concise prompts.

Several of those improvements stem from Claude 3’s expanded context window.

A model’s context, or context window, is the input data (such as text) that the model takes into account before producing output. Models with small context windows tend to “forget” the details of even very recent conversations, which leads them to stray off topic, often in problematic ways. As an added benefit, large-context models can, at least in theory, better capture the narrative flow of the material they take in and produce more contextually rich responses.
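To make the “forgetting” effect concrete, here is a minimal sketch of how a fixed window can push older messages out of view. It illustrates the general idea rather than how any Anthropic model manages context; the function `fit_to_window` is hypothetical, and token counts are approximated by whitespace-separated words, which real tokenizers do not do.

```python
from typing import List


def fit_to_window(messages: List[str], max_tokens: int) -> List[str]:
    """Keep the most recent messages whose combined size fits the window.

    Assumption: one whitespace-separated word counts as one token.
    """
    kept: List[str] = []
    used = 0
    for message in reversed(messages):  # walk from newest to oldest
        cost = len(message.split())
        if used + cost > max_tokens:
            break  # this message and everything older is "forgotten"
        kept.append(message)
        used += cost
    return list(reversed(kept))  # restore chronological order


chat = [
    "My name is Ada and I am planning a trip to Lisbon.",
    "I prefer trains over flights.",
    "What should I pack?",
]
print(fit_to_window(chat, max_tokens=10))   # drops the first message
print(fit_to_window(chat, max_tokens=100))  # keeps all three
```

With the 10-token budget the destination is no longer visible to the model, so any packing advice would be generic; the larger budget keeps the whole conversation in view.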

According to Anthropic, Claude 3 will initially support a context window of 200,000 tokens, or roughly 150,000 words. Select customers will get access to a context window of one million tokens, or around 700,000 words. That puts it on par with Google’s recently released Gemini 1.5 Pro, which also offers a context window of up to a million tokens.
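The token and word figures above imply a rough conversion rate, which the following sketch works out. It uses only the numbers quoted in the article; the helper names and the labels "standard" and "extended" are hypothetical, and the word limits are approximations rather than guarantees.

```python
# Figures quoted in the article: (tokens, approximate words).
WINDOWS = {
    "standard": (200_000, 150_000),
    "extended": (1_000_000, 700_000),
}


def words_per_token(tokens: int, words: int) -> float:
    """Return the implied average number of words per token."""
    return words / tokens


def fits(word_count: int, window: str = "standard") -> bool:
    """Rough check: does a text of `word_count` words fit the window?"""
    _, max_words = WINDOWS[window]
    return word_count <= max_words


for name, (tokens, words) in WINDOWS.items():
    print(name, round(words_per_token(tokens, words), 2))

print(fits(120_000))              # True
print(fits(400_000))              # False
print(fits(400_000, "extended"))  # True
```

The two quoted pairs work out to about 0.75 and 0.7 words per token, so the word counts are best read as ballpark estimates.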

Claude 3 isn’t flawless, even though it is an improvement over its predecessor.

Anthropic acknowledges in a technical whitepaper that Claude 3 is not immune to the problems that affect other GenAI models, namely bias and hallucinations (that is, making things up). Unlike some GenAI models, Claude 3 cannot search the web; it can only answer questions using data from before August 2023. And although Claude is multilingual, it is less fluent in certain “low-resource” languages than it is in English.

However, Anthropic promises regular updates to Claude 3 in the coming months.

“We don’t believe that model intelligence is anywhere near its limits, and we plan to release [enhancements] to the Claude 3 model family over the next few months,” the company writes in a blog post.

Opus and Sonnet are available now on the web and through Anthropic’s dev console and API, Amazon’s Bedrock platform, and Google’s Vertex AI. Haiku will follow later this year.

The price breakdown is as follows:

- Opus: $15 per million input tokens, $75 per million output tokens

- Sonnet: $3 per million input tokens, $15 per million output tokens

- Haiku: $0.25 per million input tokens, $1.25 per million output tokens
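As a rough illustration of what those rates mean per request, the sketch below estimates costs, assuming every figure in the list is a price in US dollars per million tokens, as the Haiku entry states. The function `estimate_cost` and the example workload are hypothetical, and real bills may differ.

```python
from decimal import Decimal

# USD per million tokens as (input, output), taken from the list above.
PRICES = {
    "opus": (Decimal("15"), Decimal("75")),
    "sonnet": (Decimal("3"), Decimal("15")),
    "haiku": (Decimal("0.25"), Decimal("1.25")),
}
MILLION = Decimal(1_000_000)


def estimate_cost(model: str, input_tokens: int, output_tokens: int) -> Decimal:
    """Estimate the USD cost of one request at the listed rates."""
    in_rate, out_rate = PRICES[model]
    return (in_rate * input_tokens + out_rate * output_tokens) / MILLION


# Hypothetical workload: 10,000 input tokens and 1,000 output tokens.
for model in PRICES:
    print(model, estimate_cost(model, 10_000, 1_000))
```

For that workload the estimate comes to about $0.225 on Opus, $0.045 on Sonnet, and $0.00375 on Haiku, a 60-fold spread between the largest and smallest models.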

So that’s Claude 3. But what’s the 30,000-foot view?

Anthropic’s goal is to develop a next-generation algorithm for “AI self-teaching.” Such an algorithm could be used to build virtual assistants that can respond to emails, conduct research, and create art, novels, and more, some of which we’ve already had a taste of with large language models like GPT-4.

Anthropic hints at this in the aforementioned blog post, saying that it plans to add features to Claude 3 that enhance its out-of-the-box capabilities by allowing Claude to code “interactively,” interact with other systems, and deliver “advanced agentic capabilities.”

That last item calls to mind OpenAI’s reported ambition to build a software agent that can automate complex tasks, such as transferring data from a document to a spreadsheet or filling out expense reports and entering them into accounting software. OpenAI’s API already lets developers build “agent-like experiences” into their apps, and Anthropic appears intent on offering comparable functionality.

Could an image generator from Anthropic be next? Frankly, that would surprise me. Image generators are the subject of much controversy these days, mostly over bias and copyright. Google was recently forced to disable its image generator after it injected diversity into pictures with little regard for historical context. And a number of image generator vendors are in legal battles with artists, who accuse them of profiting from their work by training GenAI on it without credit or compensation.

I’m curious to see how Anthropic’s “constitutional AI” training technique evolves. The company claims the technique makes the behavior of its GenAI easier to understand, more predictable, and simpler to adjust as needed. Constitutional AI aims to align AI with human intentions by having models answer questions and carry out tasks according to a simple set of guiding principles. For Claude 3, for instance, Anthropic said that it added a principle, informed by public feedback, that instructs the models to be understanding of and accessible to people with disabilities.

Anthropic is in it for the long run, whatever its ultimate goal may be. The startup wants to raise as much as $5 billion over the next 12 months or so, according to a pitch deck that was leaked in May of last year. This amount may be the minimum it needs to be competitive with OpenAI. (After all, training models isn’t cheap.) With pledges and committed funding of $2 billion and $4 billion from Google and Amazon, respectively, and well over a billion from additional investors combined, it’s well on its way.