Google’s new Gemini AI model is getting a mixed reception after its big debut yesterday, but users may have less faith in the company’s technology, or its integrity, after learning that the most impressive Gemini demo was essentially staged.

It’s easy to see why a video titled “Hands-on with Gemini: Interacting with multimodal AI” hit a million views in the last 24 hours. The striking demo “highlights some of our favorite interactions with Gemini,” showing how flexible and responsive the multimodal model (that is, one that understands and combines language and visual input) can be across a variety of inputs.

It starts by narrating a duck sketch as it evolves from a squiggle into a finished drawing, which it says is an unrealistic color, and then expresses surprise (“What the quack!”) upon seeing a blue toy duck. It then responds to various voice questions about that toy, after which the demo moves on to other show-off moves, such as tracking a ball in a cup-switching game, recognizing shadow puppet gestures, reordering sketches of planets, and so on.

It’s all very responsive, too, though the video does caution that “latency has been reduced and Gemini outputs have been shortened.” So they skipped a hesitation here and an overlong answer there; got it. All in all, it was an awe-inspiring show of force in multimodal understanding. My own skepticism that Google could ship a real contender took a hit when I watched the hands-on.

There’s just one problem: the video isn’t real. As Google describes it, “To test Gemini’s skills on a variety of difficulties, we recorded footage to build the demo. Next, we used still image frames from the video and text prompts to prompt Gemini.” (Parmy Olson of Bloomberg was the first to report the discrepancy.)

So although Gemini may be able to do some of what Google shows in the video, it didn’t, and perhaps couldn’t, do it live or in the way the video implies. In reality, it was a sequence of carefully tuned text prompts paired with still images, clearly selected and shortened in a way that misrepresents what the interaction is actually like. To be fair, you can see some of the actual prompts and responses in a related blog post, which is linked in the video description, albeit below the “… more.”

On the one hand, Gemini does appear to have produced the responses shown in the video. And who wants to sit through housekeeping commands, such as telling the model to clear its cache? On the other hand, viewers are misled about the speed, accuracy, and fundamental mode of interaction with the model.

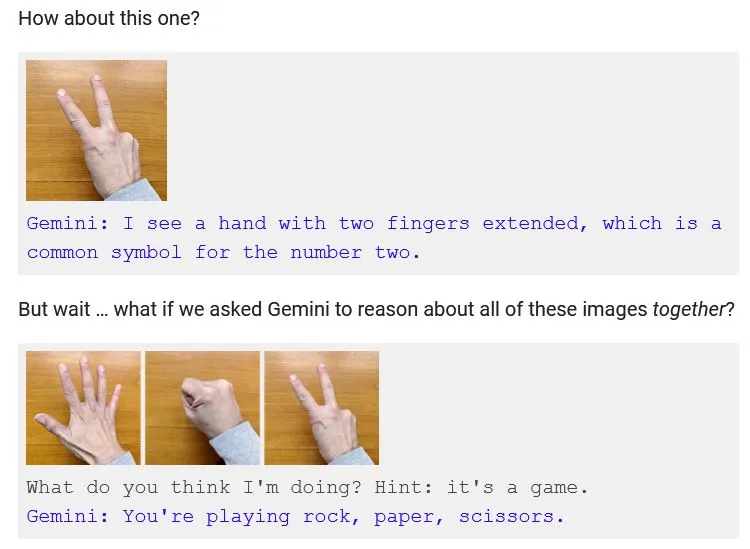

For example, at 2:45 in the video, a hand silently makes a series of gestures. Gemini quickly answers, “I know what you’re doing! It’s a game of Rock, Paper, Scissors!”

However, the very first thing the documentation of this capability notes is that the model does not reason from seeing individual gestures. It has to be shown all three gestures at once and prompted: “What do you suppose I’m up to? Hint: it’s a game.” It replies, “You’re playing rock, paper, scissors.”

Despite the similarity, these don’t feel like the same interaction. They feel fundamentally different: one is a wordless, intuitive assessment that grasps an abstract idea on the fly, and the other is an engineered, heavily hinted exchange that demonstrates the model’s limitations as much as its capabilities. Gemini did the latter, not the former. The “interaction” shown in the video didn’t happen.

Later, three sticky notes with drawings of the Sun, Saturn, and Earth are placed on the surface. Asked “Is this the right sequence?”, Gemini responds, “No, the proper order is Sun, Earth, and Saturn.” Correct! However, in the actual (written) prompt, the question is, “Is this the correct order? Explain your thinking while taking the sun’s distance into account.”

Did Gemini get this right on its own? Or did it get it wrong and need some help to produce an answer fit for a video? Did it even recognize the planets, or did it need help there as well?

In the video, a ball of paper is swapped around under cups, and the model detects and tracks it instantly and seemingly by intuition. In the blog post, not only does the task have to be explained, but the model also has to be taught (if quickly, and in natural language) how to perform it. And so on.

These examples may or may not strike you as trivial. After all, recognizing hand gestures as a game that quickly is genuinely impressive for a multimodal model! So is judging whether a half-finished drawing is a duck! But since the blog post doesn’t explain the duck sequence, I’m starting to doubt the accuracy of that exchange as well.

Now, if the video had declared at the outset, “This is a stylized representation of interactions our researchers tested,” no one would have batted an eye; we expect videos like this to be half real, half aspirational.

However, the video is titled “Hands-on with Gemini,” and when Google says it shows “our favorite interactions,” the implication is that the interactions we see are those interactions. They weren’t. Sometimes the real exchanges were far more involved, sometimes they were completely different, and sometimes they don’t appear to have happened at all. We’re not even told which model is shown: the Gemini Pro model that users can try right now, or the Ultra model due next year.

Should we have assumed that Google was only giving us a taste of what’s possible when it described the video this way? If so, perhaps we should assume that every capability shown in a Google AI demo is exaggerated for effect. In the headline, I call this video “faked.” At first I wasn’t sure this harsh language was justified (Google certainly doesn’t think so; a spokesperson asked me to change it). But despite containing some real elements, the video simply does not reflect reality. It’s fake.

Google’s statement says that the video “shows real outputs from Gemini,” which is true, and that “we made a few edits to the demo (we’ve been upfront and transparent about this),” which isn’t. It isn’t really a demo, and the interactions in the video are very different from the ones used to create it.